There is no uniform international qualification for career counsellors, although certification can be obtained nationally and internationally (e.g., through professional associations) (Savickas, 2019). Unlike the titles of engineers or psychologists, which are legally recognized, the title "career counsellor" is not regulated (Savickas, 2019). Depending on the country, career counsellors may have a variety of academic backgrounds; in Europe, for instance, a Psychology degree (specifically in Educational or Organizational Psychology) is the most common (Savickas, 2019).

Mendes et al. (2015) report that eight percent of the almost 500 professionals surveyed identified Career Guidance as the domain of intervention in which they perceive the greatest training needs. This need deserves greater attention, since continuing education is one of the factors that most qualifies a professional (Barros et al., 2019). In this scenario, networking constitutes good practice: useful resources are more easily raised and shared through communities of research and practice composed of researchers, community leaders, policy makers, and career psychologists (Barros et al., 2019; Green et al., 2019). Such cooperation enables mutual guidance in the design, training, delivery, and evaluation of career interventions and of longer-term preventive and promotive programmes in the field (Barros et al., 2019; Degn et al., 2018).

As Vocational Psychology continues to evolve, training opportunities in the field will primarily focus on counselling, while also encompassing other mental health services (Blustein et al., 2019). To break down the insularity within Vocational Psychology and expand its intellectual influences, counselling psychologists should collaborate with other professionals to develop training models, courses, and continuing education programmes (Blustein et al., 2019). In line with these considerations, the present study introduces the methodology of an online training programme designed to equip psychologists with the necessary skills and knowledge to effectively implement career counselling interventions.

Online professional training

The COVID-19 pandemic caused the largest disruption of education systems in history, affecting every continent (Paudel, 2021; Sokolová et al., 2022). It is estimated that the closure of schools and other learning spaces affected 94 percent of the world's student population (Paudel, 2021). During the pandemic, many countries adopted isolation measures that forced people to stay at home (Mäkelä et al., 2020), and many services had to close their doors or adapt their activities (Paudel, 2021). To mitigate the direct impact of the virus, technology-based teaching became the most appropriate alternative for keeping educational activities running in many parts of the world (Paudel, 2021). In the training field, these circumstances led authorities to consider online classes as a replacement for face-to-face training, not only to counteract the spread of COVID-19 but also to ensure the continuity of the training process (Teymori & Fardin, 2020).

Whereas online training was previously considered only an alternative or supplementary resource (Teymori & Fardin, 2020), since the pandemic considerable efforts have been made to implement it around the world (Mäkelä et al., 2020). Even in the post-pandemic phase, training may undergo further changes at a global level (Teymori & Fardin, 2020).

Distance learning is defined as formal institutional education in which the learning group is separated and interactive telecommunication systems are used to connect learners, resources, and trainers (Simonson, 2009). Because online learning is accessed over the Internet, anytime and anywhere, it can be classified as a distance learning system (Lu & Dzikria, 2019). The term distance learning has been applied to a variety of programmes serving numerous audiences through a wide variety of media (Simonson et al., 2015), and rapid changes in technology have challenged traditional ways of defining distance training (Simonson et al., 2015). Online training brings numerous benefits but also presents some difficulties (Afrouz & Crisp, 2020; Davis et al., 2018; Lazorak et al., 2021; Paudel, 2021; Song et al., 2004). On the one hand, online education can provide knowledge to anyone, anywhere, as long as they have Internet access (Paudel, 2021), offering flexibility to those living in remote locations and those with family and community commitments (Afrouz & Crisp, 2020). On the other hand, it requires considerable technological skill from both trainees and trainers (Afrouz & Crisp, 2020; Song et al., 2004). Moreover, the flexibility of this modality requires trainees to exercise greater control over their time, space, and pace, as well as greater motivation to continue the course (Martins et al., 2019; Song et al., 2004). Indeed, dropout rates in online training are considered high (Lu & Dzikria, 2019).

Planning and design of training programmes

Training plays a vital role in developing human resources and generating new knowledge (Perez-Soltero et al., 2019). When designing and implementing training programmes, it is crucial to ensure that they deliver a valuable training experience (Simonson et al., 2015). Evaluating the effectiveness of a training programme involves systematically assessing its value and impact (Kirkpatrick & Kirkpatrick, 2006; Yarbrough et al., 2011). Maximizing training effectiveness requires careful planning, including needs assessment, goal definition, identification of subject content, and programme evaluation (Kirkpatrick & Kirkpatrick, 2006).

To prepare psychologists to intervene immediately after training, it is essential to evaluate them before, during, and after the training process. Pre-training assessments help identify specific training needs, while feedback gathered during training assesses content comprehension, trainee commitment, and overall satisfaction. After training, evaluations by intervention clients provide valuable insights into trainees' performance in real-world contexts. The literature underscores the importance of evaluating each of these stages (Kirkpatrick & Kirkpatrick, 2006; Kunche et al., 2011; Rae, 1999). Needs-assessment questionnaires are considered a more practical method than other approaches (Kirkpatrick & Kirkpatrick, 2006).

The evaluation of effectiveness often follows Kirkpatrick and Kirkpatrick's Four-Level Evaluation Model, which includes reaction, learning, behaviour, and outcomes (Kirkpatrick & Kirkpatrick, 2006; Perez-Soltero et al., 2019). Reaction assesses trainees' response to the training, emphasizing the importance of a positive reaction. Learning evaluates changes in attitudes, knowledge, and skills. Behaviour examines the application of acquired knowledge and skills in practice. Outcomes refers to the final results of the training, which should align with the intended objectives. Learning can be assessed through tests, while behaviour can be evaluated through questionnaires administered to those who interact with the trainees. The results are analysed based on the intended impact of the training (Kirkpatrick & Kirkpatrick, 2006).

Objectives

This pilot study presents an online training programme methodology for training psychologists in effective career interventions with the unemployed. The study aims to: (1) assess trainees’ initial knowledge levels and track knowledge acquisition and engagement during the learning process; (2) analyse trainees' performance and their perception of training quality; and (3) examine the outcomes of the training through the evaluations of the intervention groups in which the trainees implemented the intervention and through their professional performance. Importantly, this pilot study focuses on assessing the feasibility of the online training programme and does not test hypotheses; its analyses are primarily descriptive (Leon et al., 2011).

Training programme

The training aimed to prepare psychology professionals in theoretical, scientific, and technical competencies for psychological interventions in employability and career management with unemployed adults. It was accredited by the Portuguese Psychologists' Association (OPP) in the areas of Educational Psychology, Work, Social and Organizational Psychology, and Vocational and Career Development Psychology.

The training pursued specific objectives, namely: understanding recent theoretical and empirical perspectives on employability within the intervention framework; addressing the specific needs of group interventions with economically and socially vulnerable populations; considering the cultural heritage and contextual needs of the target population; identifying and describing the effectiveness criteria and crucial components of career interventions; understanding the challenges of using communication technologies in such interventions; and recognizing, reflecting on, and implementing the strategies and activities outlined in the career psychological intervention, together with the associated variables and evaluation methods.

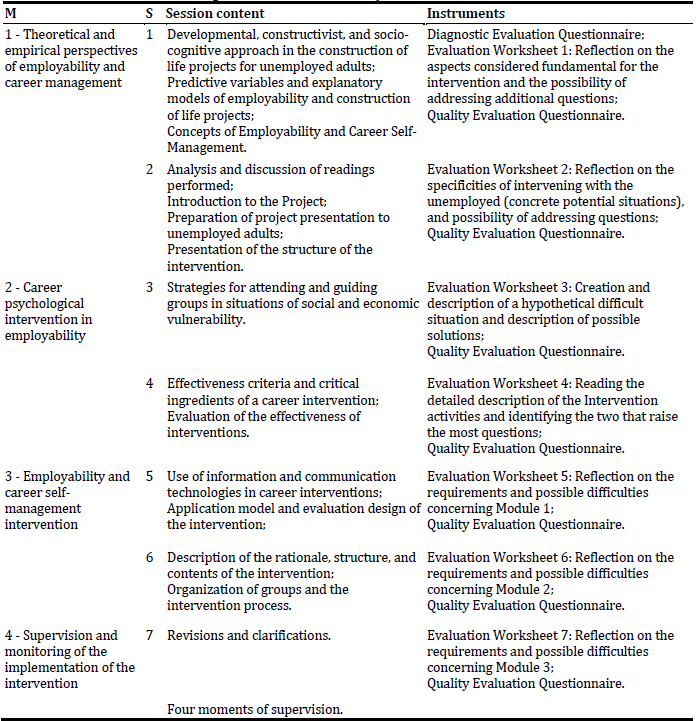

To achieve the above-mentioned objectives, the training was structured in four modules. The training was divided into seven 6-hour sessions, for a total of 42 hours of training (Table 1). In modules 1, 2, and 3, half of each session was asynchronous. The training also included 18 hours of supervision in module 4, which only had one asynchronous session.

The training methodology combined four vectors: autonomous learning, collaborative learning, continuous monitoring, and practical activities. Through autonomous learning, trainees were encouraged to construct knowledge independently and to reflect on their learning profile and objectives. Collaborative learning involved exchanging experiences and interacting with the group (trainees and trainers) based on professional and personal interests. Continuous monitoring was ensured through a sequential training model in which trainers observed the learning process. Practical activities encompassed participation in synchronous sessions and asynchronous exercises: trainees accessed multimedia content presented by the trainers, completed the corresponding activities and exercises, and engaged actively in the synchronous sessions.

Participants and Procedures

The training and intervention were carried out under the Careers Project (ALG-06-4234-FSE-000047), a social impact partnership to support employability in the Algarve, Southern Portugal. The project was approved by an ethics committee (CEICSH 002/2022). The training that prepared the psychologists for the intervention was delivered online (e-learning) between February and March 2022, and the intervention took place between March and April 2022.

The trainee recruitment process was led by the project coordinator, who holds a PhD in Vocational Psychology and Career Development, and included a curriculum vitae analysis and an interview. Selected trainees were informed of the results and received the training manual, and they signed consent forms before answering the questionnaires. The Sociodemographic Questionnaire and the Diagnostic Evaluation Questionnaire were administered in the first training session. During each asynchronous session, trainees were asked to complete the Evaluation Worksheet and the Quality Evaluation Questionnaire. A group chat supported interaction among trainees and trainers between sessions. After the training, the trainees were divided into groups according to the location of the intervention, and within each group they assumed the role of either psychologist or assistant psychologist.

The sample size was determined by pragmatic considerations and the exploratory nature of the pilot study (Leon et al., 2011). The trainees were eight Psychology professionals hired to implement the intervention. Five were psychologists and members of the OPP, one was completing the professional internship required for OPP membership, one had completed a Master’s degree in Vocational Psychology and Career Development, and one was finishing a Master's degree in Psychology. They worked in different regions of the country (n = 2 north; n = 2 centre; n = 2 south), with professional experience ranging from zero to 13 years (M = 4.25 years, SD = 4.4). The trainees were all female and of Portuguese nationality, aged between 24 and 39 years (M = 30.75; SD = 4.9). The six trainers were also all female and Portuguese, aged between 25 and 63 years (M = 39.83; SD = 14.0). Three of the trainers held doctorates with advanced specialization in Vocational Psychology and Career Development, two were first-year doctoral students, and one held a Master's degree in Psychology. All trainers worked in the north of the country, with experience ranging from two to 40 years (M = 16, SD = 15.2).

The intervention clients were unemployed individuals invited by a governmental institution to attend a career intervention. Clients signed consent forms before participating and, during the intervention, were asked to complete the Intervention Quality Evaluation Questionnaire after each session. There were 58 intervention clients, 40 of them female, aged between 19 and 64 years (M = 44.60; SD = 10.32). Fifty-two were Portuguese and six were Brazilian. All lived in the Algarve, in seven different counties, and were assigned to four groups in four geographical areas close to their counties of residence.

Instruments

Given the need to evaluate the feasibility of the research approach and select appropriate outcome measures, it was necessary to develop our own instruments for the pilot study. This ensured that the specific research objectives were adequately addressed and that the data collected accurately reflected the intended variables (Leon et al., 2011).

Sociodemographic Questionnaire. This was designed to collect data on the trainees’ ages, nationalities, qualifications, experience, and current professional situations.

Diagnostic Evaluation Questionnaire. This was constructed to assess the trainees’ technical and theoretical knowledge. It consists of 41 true/false items on scientific knowledge about Career Development Psychology (e.g., "Academic learning and achievement consist of a domain of career development skills in adulthood").

Evaluation Worksheets. These were designed to assess the trainees' participation, engagement, and knowledge acquisition during the training. The questions vary according to the objectives of the session being assessed and are answered in an open-ended format (e.g., "Please indicate and justify two to three aspects that you think are key to the intervention"). A qualitative evaluation grid converts the answers into a final quantitative score based on the level of knowledge, involvement, and reflection demonstrated.

Quality Evaluation Questionnaire. This was designed to collect the trainees’ perceptions of the training regarding management and organization (i.e., hours of operation); objectives, needs, and utility (i.e., level of learning, importance of the work developed, complementary activities or content, and utility of the learning); and trainers (i.e., adherence to the timetable, mastery of content, ability to communicate and present, availability to clarify doubts, ability to motivate trainees, relationship with trainees, and general performance). Items are answered on a six-point scale (1 = very poor, 6 = very good).

Intervention Quality Evaluation Questionnaire. This was designed to collect the intervention clients' perceptions of the quality of each session and of the psychologists' performance. It comprises a single item, answered on a 10-point scale: “Please indicate how much you consider that this session contributed to solving the issue that motivated your enrolment in the Intervention”.

Data analysis

The Diagnostic Evaluation Questionnaire responses were scored and converted to a 0 to 20 scale. The Evaluation Worksheets were assessed by an independent jury of three members, who used a qualitative evaluation grid to score each trainee's written answers on a 0 to 20 scale. Each trainee's final score on a worksheet was the mean of the jury members' scores, and the mean of each Evaluation Worksheet across trainees was then computed. A non-parametric Wilcoxon test was used to compare the evolution of scores across session worksheets. The quantitative data from the Quality Evaluation Questionnaire were converted to a 0 to 20 scale, while the qualitative data underwent content analysis to extract the categories identified by the trainees. For the Intervention Quality Evaluation Questionnaire, the mean and median scores for each session were calculated for each trainee group. Given the nature of the pilot study, no further inferential tests were conducted, and the Wilcoxon comparison should be regarded as exploratory (Leon et al., 2011).
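The conversions to a 0 to 20 scale described above can be sketched as follows. The exact formulas are not reported, so this is a minimal sketch under stated assumptions: a linear rescaling of the number of correct answers on the 41-item Diagnostic Evaluation Questionnaire, a linear mapping of the six-point quality ratings so that 1 becomes 0 and 6 becomes 20 (using `20 * rating / 6` instead would be another plausible choice), and a simple arithmetic mean of the jury members' worksheet scores. All function names are hypothetical.

```python
from statistics import mean

# Minimal sketch of the 0-20 conversions described in the Data analysis section.
# ASSUMPTION: all conversions are linear; the study does not report its formulas.


def diagnostic_to_20(n_correct: int, n_items: int = 41) -> float:
    """Rescale the number of correct true/false answers to a 0-20 score."""
    if not 0 <= n_correct <= n_items:
        raise ValueError("n_correct must lie between 0 and n_items")
    return 20 * n_correct / n_items


def six_point_to_20(rating: float) -> float:
    """Map a 1-6 quality rating (1 = very poor, 6 = very good) onto 0-20.

    ASSUMPTION: 1 -> 0 and 6 -> 20, in equal steps of 4 points.
    """
    if not 1 <= rating <= 6:
        raise ValueError("rating must lie between 1 and 6")
    return 20 * (rating - 1) / 5


def worksheet_score(jury_scores: list[float]) -> float:
    """A trainee's final worksheet score: the mean of the jury members' 0-20 scores."""
    return mean(jury_scores)


def worksheet_mean(jury_scores_by_trainee: dict[str, list[float]]) -> float:
    """Hypothetical helper: mean of one Evaluation Worksheet across trainees."""
    return mean(worksheet_score(s) for s in jury_scores_by_trainee.values())


if __name__ == "__main__":
    # Hypothetical inputs, not data from the study.
    print(diagnostic_to_20(30))  # 30 of 41 items answered correctly
    print(six_point_to_20(5))    # a rating of 5 ("good")
    print(worksheet_mean({"P1": [14, 15, 16], "P2": [12, 12, 13.5]}))
```

Keeping each conversion in its own function makes the assumed mapping explicit and easy to swap if the original scoring rule differs.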

Results

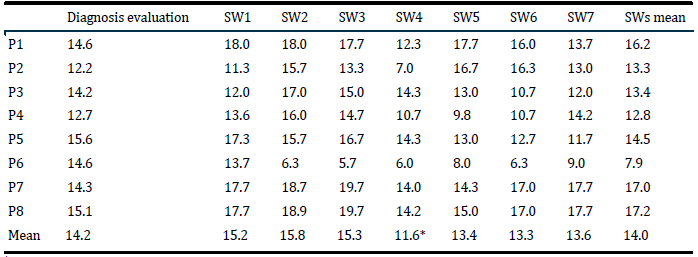

The trainees’ results at each evaluation moment and the mean of all evaluations are presented in Table 2. Of the eight trainees, P3, P5, and P6 scored lower at the end of the training than at the beginning (with only P6 obtaining what can be considered negative results), whereas all the other trainees improved their results over the training. At the individual level, and in comparison with the diagnostic evaluation, P1 obtained higher results in all session worksheets except SW4 and SW7, especially in SW1 and SW2. P2 also obtained higher results in all session worksheets except SW1 and SW4, especially in SW5 and SW6. P3 obtained her highest result in SW2 and her lowest in SW6. P4 obtained higher results in most session worksheets, especially in SW2, the exceptions being SW4, SW5, and SW6. P5 obtained her highest results in SW1 and SW3 and her lowest in SW7. P6 obtained lower scores throughout the training, especially from SW2 onward. Finally, P7 and P8 obtained higher results in all session worksheets except SW4 and SW5, especially in SW3. By session worksheet, all trainees except P6 improved on their diagnostic evaluation results in SW2 and SW3, whereas all trainees except P3 obtained lower results in SW4. The Wilcoxon test revealed a statistically significant decrease from SW3 to SW4, z = -2.380, p < 0.05.
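For readers wishing to check the reported statistic, the sketch below implements the Wilcoxon signed-rank test (the paired variant, which we assume was used because the same eight trainees completed both worksheets) with its large-sample normal approximation. With n = 8 non-zero differences, the rank sum has mean n(n + 1)/4 = 18 and variance n(n + 1)(2n + 1)/24 = 51, so a smaller rank sum of 1 gives z = (1 - 18)/√51 ≈ -2.380, consistent with the reported value; this occurs when a single trainee improves from SW3 to SW4 and her change is the smallest in absolute value. The SW3 and SW4 scores below are hypothetical (Table 2 is not reproduced here), and the function is an illustrative reimplementation, not the software used in the study.

```python
import math


def wilcoxon_signed_rank(before, after):
    """Wilcoxon signed-rank test with the large-sample normal approximation.

    Zero differences are dropped; tied |differences| receive average ranks and
    the variance includes the usual tie correction. No continuity correction.
    Returns (w_plus, w_minus, z, two_sided_p); z is based on the smaller rank sum.
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]  # after - before
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")
    # Rank the absolute differences, averaging ranks within tied groups.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (min(w_plus, w_minus) - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p under the standard normal
    return w_plus, w_minus, z, p


if __name__ == "__main__":
    # Hypothetical 0-20 scores for eight trainees (not the study's data):
    # seven decrease, one increases by the smallest absolute amount.
    sw3 = [15.0, 16.0, 13.0, 17.0, 14.5, 12.0, 16.5, 18.0]
    sw4 = [14.0, 14.5, 13.5, 15.0, 12.0, 9.0, 13.0, 14.0]
    w_plus, w_minus, z, p = wilcoxon_signed_rank(sw3, sw4)
    print(w_plus, w_minus, round(z, 3), round(p, 4))
```

With these made-up scores the rank sums are 1 and 35, giving z ≈ -2.380 and a two-sided p of about 0.017. With only eight trainees, an exact-distribution p-value would be a reasonable alternative to the normal approximation.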

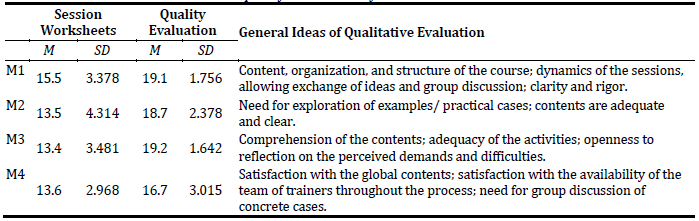

Table 3 presents the trainees’ mean evaluation by module, each module’s mean quality evaluation, and the themes most frequently mentioned in the qualitative evaluation. Overall, trainees obtained the highest results in M1, followed by M4, M2, and M3, with the greatest spread of scores in M2. Regarding satisfaction with training quality, M3 obtained the highest rating, followed by M1, M2, and M4.

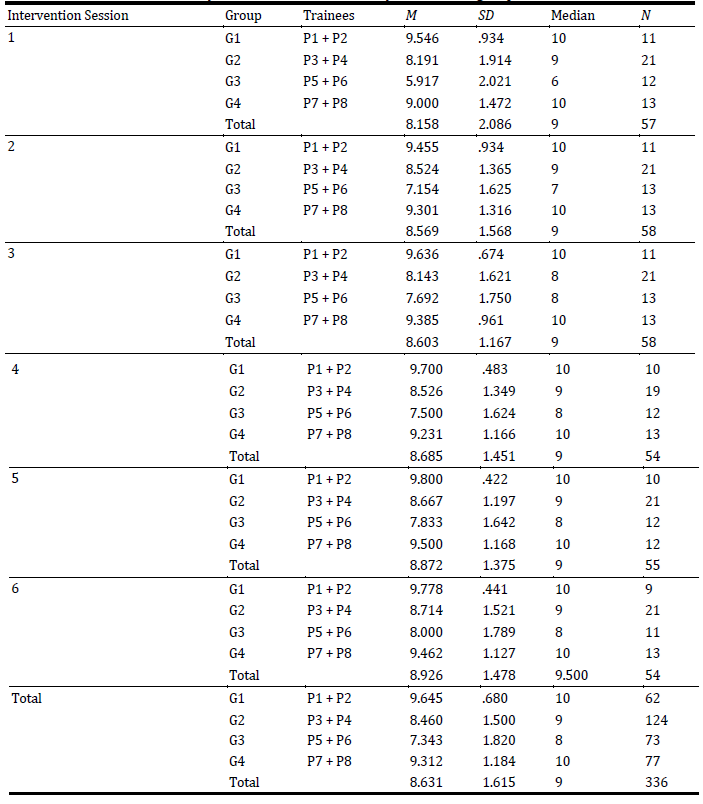

Because every intervention session was facilitated by two trainees, the clients evaluated the trainees in groups: in each group, the first trainee assumed the role of psychologist and the second that of assistant psychologist. Table 4 therefore presents the intervention clients’ evaluation of each trainee group. G1 obtained the highest client evaluations, followed by G4 and G2, whereas G3 obtained the lowest results throughout the intervention. Notably, G1 and G4 had a median of ten throughout the intervention, and G3 had the greatest spread of scores.
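The per-session statistics summarized in Table 4 can be sketched as follows for a trainee group. The client ratings below are hypothetical, and because the text does not define "spread", the sketch assumes the interquartile range (IQR) computed with the default ('exclusive') method of Python's `statistics.quantiles`; a standard deviation or range would be equally defensible choices.

```python
from statistics import mean, median, quantiles

# Sketch of per-session descriptive statistics for one trainee group's client
# ratings on the 10-point Intervention Quality Evaluation Questionnaire item.
# ASSUMPTION: "spread" is operationalised as the interquartile range (IQR).


def session_summary(ratings_by_session: dict[str, list[int]]) -> dict[str, tuple]:
    """Return {session: (mean, median, IQR)} for one group's ratings."""
    summary = {}
    for session, ratings in ratings_by_session.items():
        if len(ratings) < 2:
            raise ValueError("at least two ratings are needed to estimate quartiles")
        q1, _, q3 = quantiles(ratings, n=4)  # default method='exclusive'
        summary[session] = (round(mean(ratings), 2), median(ratings), q3 - q1)
    return summary


if __name__ == "__main__":
    # Hypothetical ratings for two groups in a single session (not study data).
    group_a = {"S1": [10, 10, 9, 10, 8, 10, 9]}
    group_b = {"S1": [9, 5, 7, 10, 6, 8, 4]}
    for label, group in (("group_a", group_a), ("group_b", group_b)):
        print(label, session_summary(group))
```

With these made-up ratings, group_a has a median of 10 and an IQR of 1.0, while group_b has a median of 7 and an IQR of 4.0, illustrating how a group can combine a lower central value with a wider spread.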

Discussion

This pilot study introduced an online training programme aimed at equipping psychologists with the skills required for conducting effective career interventions with unemployed individuals. The study had three main objectives: (1) to evaluate the trainees' initial knowledge level and track their knowledge acquisition and engagement during the training; (2) to analyse the trainees' performance and gather their perception of the training quality; and (3) to assess the results of the psychologists' training based on those of the intervention group where they implemented the intervention and on their professional performance.

Concerning the study’s first goal, the results indicated that five of the eight trainees improved their performance from the beginning to the end of the training, and seven of them obtained better results than in the diagnostic evaluation in at least two sessions. This evolution may be partly attributable to the evaluations carried out before and during the training. As expected, the diagnostic evaluation made it possible to identify the trainees' specific needs and adjust the training course accordingly (Kirkpatrick & Kirkpatrick, 2006; Kunche et al., 2011): the knowledge areas requiring more focus were identified, and the trainers adjusted each session's activities and objectives. Likewise, assessing the trainees’ engagement and learning during the training helped identify areas that required more or less exploration. These indicators are especially important in online training, given the high dropout rates (Lu & Dzikria, 2019) and trainees' autonomy in navigating their own learning paths (Paudel, 2021). In this study, the assessment instruments appear to have helped address these critical factors and contributed to the positive outcomes. Differences in results across sessions may also stem from the training content itself: results were highest in the second session and lowest in the fourth. The second session presented the career intervention that the trainees would later implement, so they may have more readily perceived its practical usefulness, becoming more involved in subsequent activities and consequently acquiring more knowledge. The fourth session, by contrast, presented the critical ingredients of career interventions; the trainees may not have perceived how to apply this knowledge in practice, which could explain their poorer results and is consistent with the statistically significant decrease from SW3 to SW4 in the Wilcoxon test.

The results also indicate that trainee knowledge and involvement fluctuated throughout the training. This may simply reflect greater difficulty with the topics covered in the sessions with lower results; however, an individual analysis of the trainees whose performance fluctuated reveals further detail. For example, the trainee whose evaluations were negative from the second worksheet onward (P6) showed a lack of involvement more than a lack of knowledge acquisition. In practice, she gradually disinvested from the worksheets, giving increasingly brief and superficial answers from session to session, and participated less and less actively in synchronous sessions, including keeping her camera turned off. This pattern suggests that a lack of responsibility, motivation, and willingness to engage with the training undermines autonomy, an essential prerequisite for successful learning (Lazorak et al., 2021). Taken together, these findings suggest that success in online training may be favoured by prior and regular evaluations used to adjust the training to the trainees (Kirkpatrick & Kirkpatrick, 2006; Kunche et al., 2011); that perceiving the practical usefulness of session content seems to foster trainees' motivation to acquire knowledge and become involved (Edelson & Joseph, 2004); and, finally, that online training may not suit all types of participants (Lu & Dzikria, 2019).

As for the second goal, both the trainees’ overall performance and their quality evaluations were positive. Performance was highest in the first module. As noted above, fluctuations in scores across modules may reflect variation in trainee engagement linked to the perceived usefulness of module content (Edelson & Joseph, 2004). In the diagnostic assessment, trainees expressed the need for more training on theoretical and empirical perspectives on employability and career management, which were covered in the first module, where their overall performance was highest. Their performance in modules 2, 3, and 4 nonetheless remained consistently above average, which may reflect moderate engagement, possibly because many trainees were already familiar with these topics. Regarding perceived training quality, all modules received positive feedback. In modules 1, 3, and 4 specifically, trainees expressed satisfaction with the four training vectors (autonomous learning, collaborative learning, continuous monitoring, and practical activities). In the first module, trainees appreciated the dynamic atmosphere of the sessions, which fostered the exchange of ideas, as well as the clarity and rigour with which the trainers presented the content, supporting collaborative learning.

In the third module, trainees highlighted their satisfaction with the practical activities and autonomous learning, noting the openness to reflect on the demands and difficulties of the training. In the fourth module, trainees emphasized their satisfaction with the trainers' continuous monitoring throughout the process. This feedback suggests that the training design was well received and that these vectors may be key factors in trainee satisfaction and commitment. The trainees' views align with existing research on challenges in online training (Afrouz & Crisp, 2020; Davis et al., 2018; Song et al., 2004), which should be taken into account because such challenges can affect outcomes, retention, and engagement (Davis et al., 2018). Trainees asked for more practical cases in the modules with which they were less satisfied (modules 2 and 4), which reinforces the importance of providing practical content that can be applied directly to their work. Module 2 covered strategies for guiding and supporting the intervention's target population, as well as technical aspects of the intervention, while module 4 focused on revision and supervision. The nature of this content, addressed in a more expository and less active way by the trainers, may have affected the trainees’ learning (Freeman et al., 2014). Notably, although trainees rated module 3 as the most satisfactory, their performance was lowest in that module. This preference may be explained by the active teaching methods used in its sessions: the group discussions it facilitated may have encouraged trainees to be more engaged and reflective, rather than the topics simply being easier.

Regarding the third goal, the results indicate that the trainees with better overall training performance were those whose intervention groups received better evaluations from the intervention clients. A lower client evaluation suggests that the issue that motivated participation in the intervention was not fully addressed. In this study, the trainee groups with no negative training results received the highest client evaluations. The groups with the highest-performing trainees (G1 and G4) obtained the highest median scores (ten), and G2 was also well evaluated, with a median score of 9. These evaluations reveal client satisfaction and the perception that their expectations for participating were met. G3, which included P6, was the exception, with the greatest spread of scores and the lowest evaluation throughout the intervention. This may indicate that P6’s lower training evaluation translated into lower performance during the intervention and, in turn, a lower group evaluation by the clients. The results suggest that the training contributed to preparing psychologists for the intervention, as trainee performance appears to be linked to clients' evaluations of how well their expectations were met, underscoring the critical role of professional performance in achieving positive outcomes for clients (Behrendt et al., 2021). Overall, all trainee groups were positively evaluated by the clients, and these evaluations differentiated between groups in a way that mirrored the trainees' training results. Interestingly, having two psychologists in each intervention group appeared to influence the results positively. This observation highlights the importance of collaboration and shared responsibility in achieving positive outcomes for clients, as group results seemed to depend on the performance of both professionals rather than just one. This arrangement may also help prevent subpar performance, emphasizing the value of teamwork and mutual support in delivering high-quality services.

Limitations and future directions

Although the findings are encouraging, certain limitations must be acknowledged. First, the pilot study relied on only eight psychologists, who were specifically recruited for the career intervention and required to undergo the training. Moreover, the recruitment process itself may have introduced selection bias, as only individuals meeting specific criteria were included, given the limited number of available positions. The small sample and potential selection bias, both common in pilot studies, restrict the generalizability of the findings to the broader population of psychologists. Second, as a pilot study, this research did not test hypotheses, and its only inferential comparison (the Wilcoxon test between session worksheets) was exploratory and based on eight trainees. The results should therefore be interpreted cautiously, as they do not provide definitive conclusions (Leon et al., 2011). Third, this pilot study was exploratory in nature and aimed to assess the feasibility of the online training programme and the effects of the career interventions; the limited prior knowledge and lack of established protocols in this area may have affected the reliability and validity of the findings (Leon et al., 2011). Future research should therefore conduct larger-scale studies with more diverse samples of psychologists to enhance generalizability, and incorporate hypothesis-driven inferential analyses and established protocols to provide more robust evidence regarding the effectiveness of the career intervention. These limitations should be taken into account when interpreting the results and planning future research.

Conclusions

The training programme achieved positive outcomes in terms of trainee evaluations, perceived quality, and overall satisfaction. Most trainees progressed in their learning, and their intervention groups received favourable evaluations from the clients, with the highest-performing trainees obtaining the best evaluations in the real-world intervention. The study assessed the suitability of the training content, the trainees' learning processes, and the impact of training on field performance. By presenting the methodology, contents, and results of the training programme, this study contributes to the literature in an area where training needs are widely acknowledged.

Data availability statement

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available because they contain information that could compromise the privacy of research participants.

CRediT authorship contribution statement

Pedro Gaspar: Conceptualization; Formal analysis; Methodology; Project administration; Resources; Writing - Original Draft. Catarina Carvalho: Data Curation; Formal analysis; Investigation; Methodology; Project administration; Resources; Writing - Original Draft. Célia Sampaio: Formal analysis; Investigation; Project administration; Resources; Software; Writing - Review & Editing. Maria do Céu Taveira: Supervision; Validation; Writing - Review & Editing. Ana Daniela Silva: Conceptualization; Funding acquisition; Investigation; Methodology; Supervision; Validation; Visualization; Writing - Review & Editing.